Releasing PAWS and PAWS-X: Two New Datasets to Improve Natural Language Understanding Models

Word order and syntactic structure have a large impact on sentence meaning — even small perturbations in word order can completely change interpretation. For example, consider the following related sentences:

1. Flights from New York to Florida.

2. Flights to Florida from New York.

3. Flights from Florida to New York.

All three contain the same set of words. However, sentences 1 and 2 have the same meaning and form a paraphrase pair, while sentences 1 and 3 have very different meanings and form a non-paraphrase pair. The task of deciding whether a pair of sentences is a paraphrase is called paraphrase identification, and it is important to many real-world natural language understanding (NLU) applications such as question answering. Perhaps surprisingly, even state-of-the-art models like BERT fail to tell apart many non-paraphrase pairs like 1 and 3 above when trained only on existing NLU datasets. Existing datasets lack training pairs of this kind, so it is hard for machine learning models to learn the pattern even if they have the capacity to understand complex contextual phrasings.
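The word-overlap point can be checked directly. The sketch below is only an illustration, not part of the PAWS tooling: the `bag_of_words` helper and its simple whitespace tokenization are assumptions made for this example. It shows that a representation which discards word order cannot separate the three sentences.

```python
from collections import Counter


def bag_of_words(sentence: str) -> Counter:
    """Lowercase, drop the final period, and count tokens (word order is discarded)."""
    return Counter(sentence.lower().rstrip(".").split())


s1 = "Flights from New York to Florida."
s2 = "Flights to Florida from New York."
s3 = "Flights from Florida to New York."

# All three sentences map to the same bag of words...
same_bag = bag_of_words(s1) == bag_of_words(s2) == bag_of_words(s3)
# ...even though the token sequences of sentences 1 and 3 differ.
different_order = s1.split() != s3.split()

print(same_bag, different_order)
```

Any model whose input is effectively this bag has no signal with which to separate the paraphrase pair (1, 2) from the non-paraphrase pair (1, 3).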

To address this, we are releasing two new datasets for use in the research community: Paraphrase Adversaries from Word Scrambling (PAWS) in English, and PAWS-X, an extension of the PAWS dataset to six typologically distinct languages: French, Spanish, German, Chinese, Japanese, and Korean. Both datasets contain well-formed sentence pairs with high lexical overlap, about half of which are paraphrases while the rest are not. Including the new pairs in the training data for state-of-the-art models improves their accuracy on this problem from below 50% to 85-90%. In contrast, models that do not capture non-local contextual information fail even with the new training examples. The new datasets therefore provide an effective instrument for measuring the sensitivity of models to word order and structure.

The PAWS dataset contains 108,463 human-labeled pairs in English, sourced from Quora Question Pairs (QQP) and Wikipedia pages. PAWS-X contains 23,659 human-translated PAWS evaluation pairs and 296,406 machine-translated training pairs. The table below gives detailed statistics of the datasets.

| Dataset | PAWS | PAWS | PAWS-X | PAWS-X | PAWS-X | PAWS-X | PAWS-X | PAWS-X |
| --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Language | English | English | Chinese | French | German | Japanese | Korean | Spanish |
| Source | QQP | Wiki | Wiki | Wiki | Wiki | Wiki | Wiki | Wiki |
| Training | 11,988 | 79,798 | 49,401† | 49,401† | 49,401† | 49,401† | 49,401† | 49,401† |
| Dev | 677 | 8,000 | 1,984 | 1,992 | 1,932 | 1,980 | 1,965 | 1,962 |
| Test | – | 8,000 | 1,975 | 1,985 | 1,967 | 1,946 | 1,972 | 1,999 |

† The training set of PAWS-X is machine translated from a subset of the PAWS Wiki dataset in English.
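As a quick consistency check, the per-split counts in the table can be summed and compared with the totals quoted in the text. The snippet below is just that arithmetic; the dictionary layout and variable names are chosen for this example and do not reflect the format of the released files.

```python
# Split sizes copied from the table above.
paws = {
    "QQP": {"train": 11_988, "dev": 677},
    "Wiki": {"train": 79_798, "dev": 8_000, "test": 8_000},
}
pawsx_dev = {
    "Chinese": 1_984, "French": 1_992, "German": 1_932,
    "Japanese": 1_980, "Korean": 1_965, "Spanish": 1_962,
}
pawsx_test = {
    "Chinese": 1_975, "French": 1_985, "German": 1_967,
    "Japanese": 1_946, "Korean": 1_972, "Spanish": 1_999,
}
pawsx_train_per_language = 49_401

# Total English PAWS pairs across both sources and all splits.
paws_total = sum(sum(splits.values()) for splits in paws.values())
# Human-translated PAWS-X pairs (dev + test across six languages).
pawsx_human = sum(pawsx_dev.values()) + sum(pawsx_test.values())
# Machine-translated PAWS-X training pairs (same size for each language).
pawsx_machine = pawsx_train_per_language * len(pawsx_dev)

print(paws_total, pawsx_human, pawsx_machine)
```

The three sums come out to 108,463, 23,659, and 296,406, matching the figures stated in the paragraph before the table.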

Creating the PAWS Dataset in English

In “PAWS: Paraphrase Adversaries from Word Scrambling,” we introduce a workflow for generating pairs of sentences that have high word overlap but are balanced with respect to whether they are paraphrases or not. To generate examples, source sentences are first passed to a specialized language model that creates word-swapped variants that are still semantically meaningful, but ambiguous as to whether they are paraphrases of the source. Human raters then judge each variant for grammaticality, and multiple raters judge whether the two sentences in each pair are paraphrases of each other.

[Figure: PAWS corpus creation workflow.]

One problem with this swapping strategy is that it tends to produce pairs that aren’t paraphrases (e.g., “why do bad things happen to good people” is not a paraphrase of “why do good things happen to bad people”). To ensure balance between paraphrases and non-paraphrases, we added other examples based on back-translation. Back-translation has the opposite bias: it tends to preserve meaning while changing word order and word choice. Together, these two strategies make PAWS balanced overall, especially for the Wikipedia portion.
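To make the swapping bias concrete, here is a deliberately naive sketch. It is not the method used to build PAWS, which relies on a specialized language model and human raters. The hypothetical `swap_variants` function simply exchanges every pair of distinct words, which is enough to show that all variants keep the same words while only a few remain sensible sentences.

```python
from itertools import combinations
from typing import List


def swap_variants(sentence: str) -> List[str]:
    """Return every sentence obtained by exchanging two distinct words."""
    words = sentence.split()
    variants = []
    for i, j in combinations(range(len(words)), 2):
        if words[i] == words[j]:
            continue  # swapping identical words would change nothing
        swapped = words.copy()
        swapped[i], swapped[j] = swapped[j], swapped[i]
        variants.append(" ".join(swapped))
    return variants


source = "why do bad things happen to good people"
variants = swap_variants(source)

# The non-paraphrase from the example above is one of the brute-force variants.
target = "why do good things happen to bad people"
print(target in variants, len(variants))
```

Most of the generated variants are ungrammatical (for instance, swapping "why" and "people"), which is one reason a real pipeline needs a language model to propose candidates and human judgments to filter them.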

Creating the Multilingual PAWS-X Dataset

After creating PAWS, we extended it to six more languages: Chinese, French, German, Korean, Japanese, and Spanish. We hired human translators to translate the development and test sets, and used a neural machine translation (NMT) service to translate the training set.

We obtained human translations from native speakers for a random sample of 4,000 sentence pairs from the PAWS development set in each of the six languages (48,000 translations in total). Each sentence in a pair was presented independently so that its translation was not affected by context. A randomly sampled subset was validated by a second worker. The final dataset has a word-level error rate of less than 5%.

Note that we allowed the translators to skip a sentence if it was incomplete or ambiguous. On average, less than 2% of the pairs were not translated, and we simply excluded them. The final translated pairs were then split into new development and test sets of ~2,000 pairs each.
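The filter-then-split step can be sketched as follows. This is a minimal illustration under stated assumptions: the text does not say how the split was drawn, so the seeded shuffle and the even halving here are assumptions, and the field names `sentence1` and `sentence2` as well as the toy data are hypothetical.

```python
import random


def split_dev_test(pairs, seed=0):
    """Drop pairs with a missing translation, shuffle, and split in half."""
    kept = [
        p for p in pairs
        if p["sentence1"] is not None and p["sentence2"] is not None
    ]
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(kept)
    half = len(kept) // 2
    return kept[:half], kept[half:]


# Toy stand-in for one language: 4,000 pairs, of which 2% have an
# untranslated second sentence (marked as None).
pairs = [
    {
        "id": i,
        "sentence1": f"translated sentence 1 of pair {i}",
        "sentence2": None if i % 50 == 0 else f"translated sentence 2 of pair {i}",
    }
    for i in range(4000)
]

dev, test = split_dev_test(pairs)
print(len(dev), len(test))
```

With this toy data, 80 pairs are dropped and the remaining 3,920 are divided evenly. The released dev and test sets are close to, but not exactly, equal in size, so the real procedure differed in its details.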

[Figure: Examples of human-translated pairs for German (de) and Chinese (zh).]

Language Understanding with PAWS and PAWS-X

We trained multiple models on the new datasets and measured classification accuracy on the evaluation sets. When trained with PAWS, strong models such as BERT and DIIN improve markedly compared with training only on the existing Quora Question Pairs (QQP) dataset. For example, on the PAWS data sourced from QQP (PAWS-QQP), BERT reaches only 33.5% accuracy if trained on existing QQP, but it recovers to 83.1% accuracy when given PAWS training examples. Unlike BERT, a simple Bag-of-Words (BOW) model fails to learn from PAWS training examples, demonstrating its weakness at capturing non-local contextual information. These results show that PAWS effectively measures the sensitivity of models to word order and structure.

[Figure: Accuracy on PAWS-QQP Eval Set (English).]

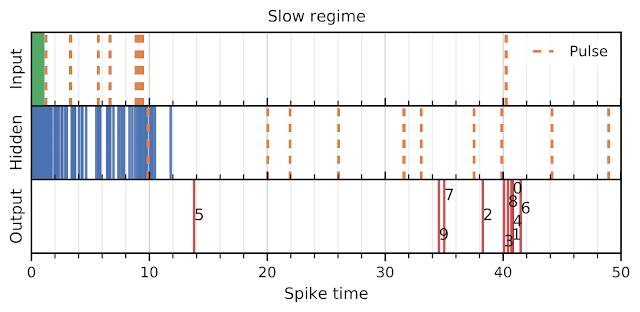

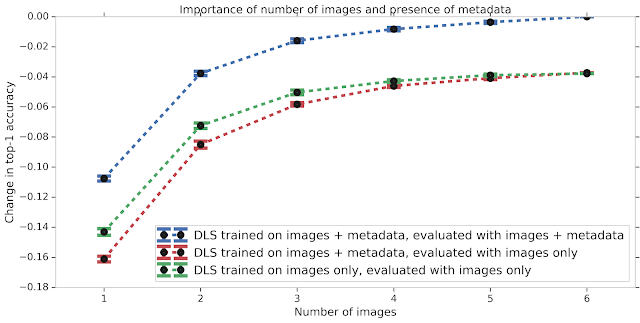

The figure below shows the performance of the popular multilingual BERT model on PAWS-X using several common strategies:

- Zero Shot: The model is trained on the PAWS English training data, and then directly evaluated on all others. Machine translation is not involved in this strategy.

- Translate Test: Train a model using the English training data, and machine-translate all test examples to English for evaluation.

- Translate Train: The English training data is machine-translated into each target language to provide data to train each model.

- Merged: Train a multilingual model on all languages, including the original English pairs and machine-translated data in all other languages.
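The four strategies differ only in which training data the model sees and whether test examples are machine-translated before evaluation. The sketch below encodes that distinction as a small lookup. The `Setup` class, the `setup_for` function, the strategy keys, and the two-letter language codes are all names invented for this illustration, not part of any released code.

```python
from dataclasses import dataclass
from typing import Tuple

# The six PAWS-X target languages, written as two-letter codes.
TARGET_LANGUAGES = ("fr", "es", "de", "zh", "ja", "ko")


@dataclass(frozen=True)
class Setup:
    train_languages: Tuple[str, ...]   # languages whose training data is used
    translate_test_to_english: bool    # machine-translate test examples first?


def setup_for(strategy: str, target: str) -> Setup:
    """Describe the data used to evaluate one target language under a strategy."""
    if target not in TARGET_LANGUAGES:
        raise ValueError(f"unknown target language: {target}")
    if strategy == "zero_shot":
        # English training data only; evaluate directly on the target language.
        return Setup(("en",), False)
    if strategy == "translate_test":
        # English training data only; test examples are translated to English.
        return Setup(("en",), True)
    if strategy == "translate_train":
        # Machine-translated training data in the target language.
        return Setup((target,), False)
    if strategy == "merged":
        # Original English pairs plus machine-translated data in all languages.
        return Setup(("en",) + TARGET_LANGUAGES, False)
    raise ValueError(f"unknown strategy: {strategy}")


print(setup_for("translate_train", "de"))
```

Only Translate Test changes the test side. The other three strategies evaluate on the human-translated test set in the target language and vary the training data.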

The results show that cross-lingual techniques help, but they also leave considerable headroom to drive multilingual research on the problem of paraphrase identification.

[Figure: Accuracy on the PAWS-X Test Set using BERT Models.]

It is our hope that these datasets will be useful to the research community to drive further progress on multilingual models that better exploit structure, context, and pairwise comparisons.

Acknowledgements

The core team includes Luheng He, Jason Baldridge, and Chris Tar. We would like to thank the Language team in Google Research, especially Emily Pitler, for the insightful comments that contributed to our papers. Many thanks also to Ashwin Kakarla, Henry Jicha, and Mengmeng Niu for their help with the annotations.