Introducing the Schema-Guided Dialogue Dataset for Conversational Assistants

Today’s virtual assistants help users to accomplish a wide variety of tasks, including finding flights, searching for nearby events and movies, making reservations, sourcing information from the web and more. They provide this functionality by offering a unified natural language interface to a wide variety of services across the web. Large-scale virtual assistants, like Google Assistant, need to integrate with a large and constantly increasing number of services, each with potentially overlapping functionality, over a wide variety of domains. Supporting new services with ease, without collection of additional data or retraining the model, and reducing maintenance workload are necessary to accommodate future growth. Despite tremendous progress, however, these challenges have often been overlooked in state-of-the-art models. This is due, in part, to the absence of suitable datasets that match the scale and complexity confronted by such virtual assistants.

In our recent paper, “Towards Scalable Multi-domain Conversational Agents: The Schema-Guided Dialogue Dataset”, we introduce a new dataset to address these problems. The Schema-Guided Dialogue dataset (SGD) is the largest publicly available corpus of task-oriented dialogues, with over 18,000 dialogues spanning 17 domains. Equipped with various annotations, this dataset is designed to serve as an effective testbed for intent prediction, slot filling, state tracking (i.e., estimating the user’s goal) and language generation, among other tasks for large-scale virtual assistants. We also propose a schema-guided approach for building virtual assistants as a solution to the aforementioned challenges. Our approach utilizes a single model across all services and domains, with no domain-specific parameters. Based on the schema-guided approach and building on the power of pre-trained language models like BERT, we open source a model for dialogue state tracking, which is applicable in a zero-shot setting (i.e., with no training data for new services and APIs) while remaining competitive in the regular setting.

The Dataset

The primary goal of releasing the SGD dataset is to confront many real-world challenges that are not sufficiently captured by existing datasets. The SGD dataset consists of over 18k annotated multi-domain, task-oriented conversations between a human and a virtual assistant. These conversations involve interactions with services and APIs spanning 17 domains, ranging from banks and events to media, calendar, travel, and weather. For most of these domains, the SGD dataset contains multiple different APIs, many of which have overlapping functionalities but different interfaces, which reflects common real-world scenarios. SGD is the first dataset to cover such a wide variety of domains and provide multiple APIs per domain. Furthermore, to quantify the robustness of models to changes in API interfaces or to the addition of new APIs, the evaluation set contains many new services that are not present in the training set.

For the creation of the SGD dataset, we have prioritized the variety and accuracy of annotations in the included dialogues. To begin with, dialogues were collected by interaction between two people using a Wizard-of-Oz style process, followed by crowdsourced annotation. Initial efforts revealed the difficulty in obtaining consistent annotations using this method, so we developed a new data collection process that minimized the need for complex manual annotation, and considerably reduced the time and cost of data collection.

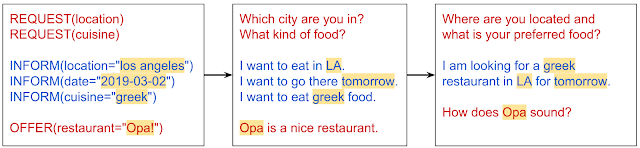

For this alternate approach, we developed a multi-domain dialogue simulator that generates dialogue skeletons over an arbitrary combination of APIs, along with the corresponding annotations, such as dialogue state and system actions. The simulator consists of two agents playing the role of the user and the assistant. Both the agents interact with each other using a finite set of actions denoting dialogue semantics with transitions specified through a probabilistic automaton, designed to capture a wide variety of dialogue trajectories. The actions generated by the simulator are converted into natural language utterances using a set of templates. Crowdsourcing is used only for paraphrasing these templatized utterances in order to make the dialogue more natural and coherent. This setup eliminates the need for complicated domain-specific instructions while keeping the crowdsourcing task simple and yields natural dialogues with consistent, high quality annotations.

The Schema-Guided Approach

With the availability of the SGD dataset, it is now possible to train virtual assistants to support the diversity of services available on the web. A common approach to do this requires a large master schema that lists all supported functions and their parameters. However, it is difficult to develop a master schema catering to all possible use cases. Even if that problem is solved, a master schema would complicate integration of new or small-scale services and would increase the maintenance workload of the assistant. Furthermore, while there are many similar concepts across services that can be jointly modeled, for example, the similarities in logic for querying or specifying the number of movie tickets, flight tickets or concert tickets, the master schema approach does not facilitate joint modeling of such concepts, unless an explicit mapping between them is manually defined.

The new schema-guided approach we propose addresses these limitations. This approach does not require the definition of a master schema for the assistant. Instead, each service or API provides a natural language description of the functions listed in its schema along with their associated attributes. These descriptions are then used to learn a distributed semantic representation of the schema, which is given as an additional input to the dialogue system. The dialogue system is then implemented as a single unified model, containing no domain or service specific parameters. This unified model facilitates representation of common knowledge between similar concepts in different services, while the use of distributed representations of the schema makes it possible to operate over new services that are not present in the training data. We have implemented this approach in our open-sourced dialogue state tracking model.

Eighth Dialogue System Technology Challenge

The Dialog System Technology Challenges (DSTCs) are a series of research competitions to accelerate the development of new dialogue technologies. This year, Google organized one of the tracks, “Schema-Guided Dialogue State Tracking“, as part of the recently concluded 8th DSTC. We received submissions from a total of 25 teams from both industry and academia, which will be presented at the DSTC8 workshop at AAAI-20.

We believe that this dataset will act as a good benchmark for building large-scale dialogue models. We are excited and looking forward to all the innovative ways in which the research community will use it for the advancement of dialogue technologies.

Acknowledgements

This post reflects the work of our co-authors Xiaoxue Zang, Srinivas Sunkara and Raghav Gupta. We also thank Amir Fayazi and Maria Wang for help with data collection and Guan-Lin Chao for insights on model design and implementation.