Now for the Soft Part: For AI, Hardware’s Just the Start, NVIDIA’s Ian Buck Says

Great processors — and great hardware — won’t be enough to propel the AI revolution forward, Ian Buck, vice president and general manager of NVIDIA’s accelerated computing business, said Wednesday at the AI Hardware Summit.

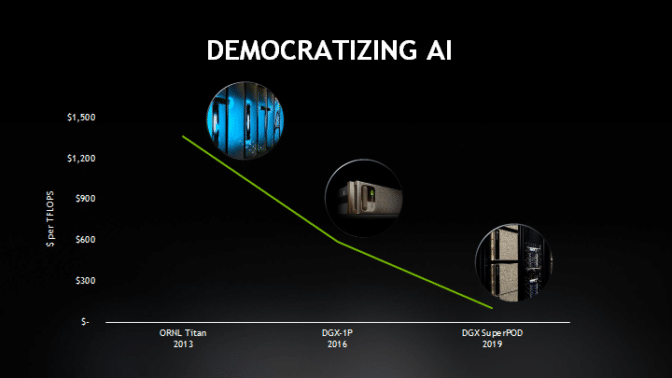

“We’re bringing AI computing way down in cost, way up in capability and I fully expect this trend to continue not just as we advance hardware, but as we advance AI algorithms, AI software and AI applications to help drive the innovation in the industry,” Buck told an audience of hundreds of press, analysts, investors and entrepreneurs in Mountain View, Calif.

Buck — known for creating the CUDA computing platform that puts GPUs to work powering everything from supercomputing to next-generation AI — spoke at a showcase for some of the most iconic computers of Silicon Valley’s past at the Computer History Museum.

AI Training Is a Supercomputing Challenge

The industry now has to think much bigger than the individual boxes that defined its past, Buck explained, weaving together software, hardware, and infrastructure to create supercomputer-class systems with the muscle to harness huge amounts of data.

Training, or creating new AIs able to tackle new tasks, is the ultimate HPC challenge – exposing every bottleneck in compute, networking, and storage, Buck said.

“Scaling AI training poses some hard challenges, not only do you have to build the fast GPU, but optimize for the full data center as the computer,” Buck said. “You have to build system interconnections, memory optimizations, network topology, numerics.”

That’s why NVIDIA is investing in a growing portfolio of data center software and infrastructure, from interconnect technologies such as NVLink and NVSwitch to NVIDIA Collective Communications Library, or NCCL, which optimizes the way data moves across vast systems.

From ResNet-50 to BERT

Kicking off his brisk, half-hour talk, Buck explained that GPU computing has long served the most demanding users: scientists, designers, artists and gamers. More recently, that list has grown to include AI. Initial AI applications focused on understanding images, a capability measured by benchmarks such as ResNet-50.

“Fast forward to today, with models like BERT and Megatron that understand human language – this goes way beyond computer vision but actually intelligence,” Buck said. “When I said something, what did I mean? This is a much more challenging problem, it’s really true intelligence that we’re trying to capture in the neural network.”

To help tackle such problems, NVIDIA yesterday announced the latest version of its inference platform, TensorRT 6. On the T4 GPU, it runs BERT-Large, a model with super-human accuracy for language understanding tasks, in only 5.8 milliseconds, well under the 10 ms threshold for smooth interaction with humans. It's just one part of NVIDIA's ongoing effort to accelerate the end-to-end pipeline.

Accelerating the Full Workflow

Inference tasks, or putting trained AI models to work, are diverse and usually part of larger applications that obey Amdahl's Law: if you accelerate only one piece of the pipeline, such as matrix multiplication, you'll still be limited by the rest of the processing steps.
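Amdahl's Law can be made concrete with a few lines of arithmetic. The sketch below is illustrative only: the function name `amdahl_speedup` and the assumption that matrix math accounts for half of a pipeline's runtime are hypothetical, not figures from the talk.

```python
def amdahl_speedup(accelerated_fraction: float, factor: float) -> float:
    """Overall speedup when only part of the runtime is accelerated.

    accelerated_fraction: share of the original runtime that gets faster (0..1).
    factor: how much faster that share becomes (e.g. 10.0 for 10x).
    """
    if not 0.0 <= accelerated_fraction <= 1.0:
        raise ValueError("accelerated_fraction must be between 0 and 1")
    if factor <= 0.0:
        raise ValueError("factor must be positive")
    serial_part = 1.0 - accelerated_fraction       # untouched steps run as before
    sped_up_part = accelerated_fraction / factor   # accelerated steps shrink
    return 1.0 / (serial_part + sped_up_part)


# Hypothetical pipeline: matrix math is 50% of runtime; the remaining
# pre/post-processing is not accelerated at all.
for factor in (10.0, 100.0, 1_000_000.0):
    print(f"{factor:>12,.0f}x faster matmul -> {amdahl_speedup(0.5, factor):.3f}x overall")
```

However large `factor` gets, the overall speedup in this example stays below 2x, because the unaccelerated half of the work still takes the same time. That ceiling, 1 / (1 - accelerated_fraction), is why accelerating the whole pipeline matters.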

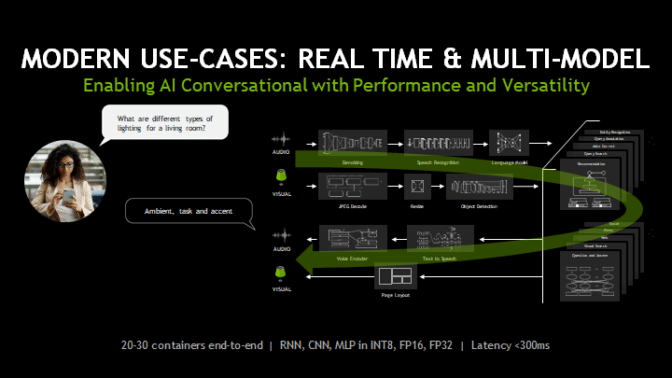

Making an AI that’s truly conversational will require a fully accelerated speech pipeline able to bounce from one crushingly compute-intensive task to another, Buck said.

Such a system could require 20 to 30 containers end to end, harnessing assorted convolutional neural networks, recurrent neural networks and multilayer perceptrons working at a mix of precisions, including INT8, FP16 and FP32. All of that has to complete in less than 300 milliseconds, leaving only about 10 ms for a single model.
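The 10 ms figure follows from dividing the end-to-end budget across the chain of models. The sketch below assumes the models run strictly one after another and that the budget is split evenly; both are simplifications, and the helper names are hypothetical.

```python
def per_model_budget_ms(total_budget_ms: float, num_models: int) -> float:
    """Evenly split an end-to-end latency budget across sequential models."""
    if num_models <= 0:
        raise ValueError("num_models must be positive")
    return total_budget_ms / num_models


def fits_budget(stage_latencies_ms: list[float], total_budget_ms: float = 300.0) -> bool:
    """True if stages run back to back finish within the end-to-end budget."""
    return sum(stage_latencies_ms) <= total_budget_ms


budget = per_model_budget_ms(300.0, 30)  # 30 chained models in a 300 ms pipeline
print(f"per-model budget: {budget:.1f} ms")  # per-model budget: 10.0 ms
print(fits_budget([5.8] * 30))   # True: hypothetically, every stage as fast as the quoted BERT-Large run
print(fits_budget([12.0] * 30))  # False: 360 ms total overshoots the budget
```

In a real deployment some stages may run in parallel and budgets are rarely uniform, so this is only a back-of-the-envelope check.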

Data Center TCO Is Driven by Its Utilization

Such performance is vital because investments in data centers will be judged by how much utility can be wrung from their infrastructure, Buck explained. “Total cost of ownership for the hyperscalers is all about utilization,” Buck said. “NVIDIA having one architecture for all the AI-powered use cases drives down the TCO.”
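One simple way to see why utilization dominates TCO is to compute what each hour of useful work effectively costs. This is a simplified model under stated assumptions: total cost is folded into a single hypothetical hourly figure, and the $3/hour number and function name are illustrative, not from the talk.

```python
def cost_per_busy_hour(hourly_cost: float, utilization: float) -> float:
    """Effective cost of each hour a server spends doing useful work.

    hourly_cost: total cost of owning/operating the server per wall-clock hour
                 (hypothetical figure; folds in hardware, power, space, etc.).
    utilization: fraction of wall-clock time spent on useful work (0 < u <= 1).
    """
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    return hourly_cost / utilization


# Hypothetical: the same $3/hour server, mostly idle vs. kept busy.
for u in (0.2, 0.5, 0.9):
    print(f"utilization {u:.0%}: ${cost_per_busy_hour(3.0, u):.2f} per busy hour")
```

The hardware cost is identical in every case; only the share of time it does useful work changes. If one architecture can serve many different AI workloads, fewer machines sit idle, which is the intuition behind Buck's point.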

Performance and flexibility are why GPUs are already widely deployed in data centers today, Buck said. Consumer internet companies use GPUs to deliver voice search, image search, recommendations, home assistants, news feeds, translations and e-commerce.

Hyperscalers are adopting NVIDIA’s fast, power-efficient T4 GPUs — available on major cloud service providers such as Alibaba, Amazon, Baidu, Google Cloud and Tencent Cloud. And inference is now a double-digit percentage contributor to NVIDIA’s data center revenue.

Vertical Industries Require Vertical Platforms

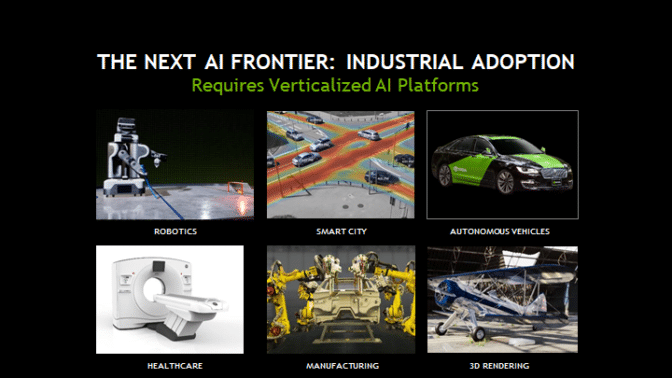

In addition to delivering supercomputing-class computing power for training and scalable systems for data centers serving hundreds of millions of users, AI platforms will need to grow increasingly specialized, Buck said.

Today AI research is concentrated in a handful of companies, but broader industry adoption needs verticalized platforms, he continued.

Buck asked who is going to do the work of building out all those applications. “We need to build domain-specific, verticalized AI platforms, giving them an SDK that gives them a platform that is already tuned for their use cases,” he said.

Buck highlighted how NVIDIA is building verticalized platforms for industries such as automotive, healthcare, robotics, smart cities, and 3D rendering, among others.

Zooming in on the auto industry as an example, Buck touched on a half dozen of the major technologies NVIDIA is developing. They include the NVIDIA Xavier system on a chip, NVIDIA Constellation automotive simulation software, NVIDIA DRIVE IX software for in-cockpit AI and NVIDIA DRIVE AV software to help vehicles safely navigate streets and highways.

Wrapping up, Buck offered a simple takeaway: the combination of AI hardware, AI software, and AI infrastructure promises to make more powerful AI available to more industries and, ultimately, more people.

“We’re driving down the cost of computing AI, making it more accessible, allowing people to build powerful AI systems and I predict that cost reduction and improved capability will continue far into the future.”

The post Now for the Soft Part: For AI, Hardware’s Just the Start, NVIDIA’s Ian Buck Says appeared first on The Official NVIDIA Blog.