Building Gene Expression Atlases with Deep Generative Models for Single-cell Transcriptomics

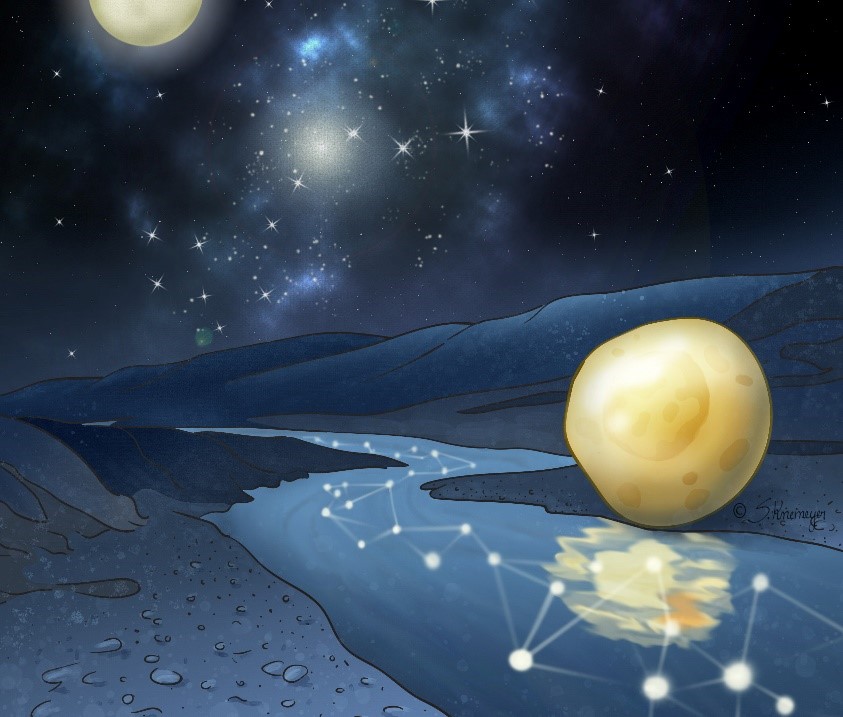

Figure: An artistic representation of single-cell RNA sequencing. The

stars in the sky represent cells in a heterogeneous tissue. The projection of

the stars onto the river reveals relationships among them that are not apparent

by looking directly at the sky. Like the river, our Bayesian model, called scVI,

reveals relationships among cells.

The diversity of gene regulatory states in our body is one of the main reasons

why such an amazing array of biological functions can be encoded in a single

genome. Recent advances in microfluidics and sequencing technologies (such as

inDrops) enabled measurement of gene expression at the single-cell level and has

provided tremendous opportunities to unravel the underlying mechanisms of

relationships between individual genes and specific biological phenomena. These

experiments yield approximate measurements for mRNA counts of the entire

transcriptome (i.e around $d = 20,000$ protein-coding genes) and a large number

of cells $n$, which can vary from tens of thousands to a million cells. The

early computational methods to interpret this data relied on linear model and

empirical Bayes shrinkage approaches due to initially extremely low sample-size.

While current research focuses on providing more accurate models for this gene

expression data, most of the subsequent algorithms either exhibit prohibitive

scalability issues or remain limited to a unique downstream analysis task.

Consequently, common practices in the field still rely on ad-hoc preprocessing

pipelines and specific algorithmic procedures, which limits the capabilities of

capturing the underlying data generating process.

In this post, we propose to build up on the increased sample-size and recent

developments in Bayesian approximate inference to improve modeling complexity as

well as algorithmic scalability. Notably, we present our recent work on deep

generative models for single-cell transcriptomics, which addresses all the

mentioned limitations by formalizing biological questions into statistical

queries over a unique graphical model, tailored to single-cell RNA sequencing

(scRNA-seq) datasets. The resulting algorithmic inference procedure, which we

named Single-cell Variational Inference (scVI), is open-source and

scales to over a million cells.

The scRNA-seq Analysis Era

Previous RNA quantification technologies were only able to collect population

level data, thus biologists could not determine if the differences in their

experiments were due to changes within a single cell type, multiple cell types,

or simply changes in frequencies of cells. Researchers can now dissociate

heterogeneous tissues into single cells, each encapsulated within a droplet with

barcodes that allow for easy sequencing and demultiplexing. After read alignment

procedures, we get a data matrix $x_{ng}$ of counts for expression of gene $g$

in cell $n$.

The standard analysis of this data matrix can be broken down into a number of

sequential tasks. At a high level, we would like to classify cells present in

our sample, which is commonly approached by clustering problem an abstract

latent space. Looking more closely, we want to identify the characteristic

genes for each cell type. , usually achieved via hypothesis testing.

Importantly, for any of these analyses, we must decouple biological signal from

technical factors. In scRNA-seq, technical factors include sequencing depth

(i.e. the number of total transcripts captured in a cell) and batch-effects (i.e

discrepancies between biological replicates). These confounding effects make any

inference problem significantly harder and therefore cannot be addressed by an

off-the-shelf machine learning algorithm.

There is a rich literature in scRNA-seq data analysis that relies on very

diverse machine learning techniques such as nearest-neighbors algorithms (MNNs),

similarity metric learning via kernel methods (SIMLR), matrix factorization

(ZINB-WaVE), and Bayesian non-parametric modeling (BISCUIT). However, all these

approaches have limitations. Namely, one specific algorithm is built to address

a unique problem. Consequently, each algorithm can use its own underlying

hypothesis that may create statistical inconsistencies. Furthermore, these

methods do not scale to more than a few thousand samples — either because of

memory or time complexity — which is an issue for analyzing modern datasets.

Deep Generative Models of Stochastic Gene Expression

As a starting point to a suitable generative model, we identify the different

factors of variability over which we would like to condition the data generating

process for the mRNA counts $x_{ng}$. First, a cell-specific latent variable

$l_n$ represents discrepancies between the number of transcripts captured in

each cell. Second, a dataset-specific factor $s_n$ represents discrepancies

between sequencing protocols or experiments conditions. Third, a cell-specific

latent variable $z_n$ encodes the parameters that governs stochastic gene

expression of the cell. This variable is typically of small dimension and

embeds the cells for downstream analysis (such as clustering).

Notably, following seminal work by Sandrine Dudoit’s group (ZINB-WaVE) we model

the conditional distribution $p(x_{ng} | z_n, l_n, s_n)$ as zero-inflated

negative binomial. Unlike ZINB-WaVE, which relies on linear models, we use deep

neural networks to parametrize these conditional distributions. We refer to our

manuscript for a complete specification of our probabilistic model.

Because the marginal distribution $p(x_n | s_n)$ is not amenable to Bayesian

computations, we rely on auto-encoding variational Bayes to learn an approximate

posterior $q(z_n, l_n | x_n, s_n)$ (Figure 1) and fit our generative model. In

particular, scVI relies on stochastic optimization by sampling from the

variational posterior and from the datasets, which ensures a scalable inference

procedure. scVI can also be used for hypothesis testing by sampling from the

variational posterior and identify gene of interest between cell-types.

Figure 1: Overview of scVI. Given a gene-expression matrix with batch

annotations as input, scVI learns a non-linear embedding of the cells that can

be used for multiple analysis tasks. We draw here the computational trees

(neural networks) used to compute the embedding as well as the distribution of

gene expression.

We refer to our manuscript for an extensive benchmarking study on a set of seven

different datasets and five different tasks (which includes embedding and

hypothesis testing). scVI compares favorably on all the datasets and all the

tasks against state-of-the-art algorithms in each task.

A Gene Expression Atlas of the Mouse Brain from a Million Single-cell Measurements

scVI provides a readily applicable solution for large-scale inference. In

particular, we could fit the 1.3 million cells dataset from 10x Genomics in less

than 1 hour on a small subset of 720 genes and less than 6 hours on a bigger

subset of 10,000 genes. The generative model embeds the data via its posterior

$q(z_n | x_n, s_n)$ (Figure 2) and identifies important genes between cell-types

via hypothesis testing.

Figure 2: tSNE embedding of a subset of cells from the 1.3M cells dataset

based on scVI’s latent space.

Towards more Systematic Integration of Prior Biological Knowledge

From this proof of concept, we are now working on extending this work to

incorporate prior biological knowledge such as integrating several datasets,

using semi-supervised models to annotate the cells, adding hierarchical

structure for cell-type taxonomy, and adding sparsity priors for greater

interpretability of the neural networks weights.

We hope that our approach will inspire the computational biology community to

develop probabilistic-model-based tools that are more consistent and better

grounded than ad-hoc algorithmic procedures.

Please feel free to read our manuscript published in Nature Methods

along with its associated News and Views. We have released code here

through a GitHub project.

Acknowledgements: We are grateful for the contributions of our collaborators:

Edouard Mehlman, Maxime Langevin, Adam Gayoso, Yining Liu, Jules Samaran,

Jeffrey Regier, and Nir Yosef.