2D or Not 2D: NVIDIA Researchers Bring Images to Life with AI

Close your left eye as you look at this screen. Now close your right eye and open your left. You’ll notice that your field of vision shifts depending on which eye you’re using. That’s because each retina captures a two-dimensional image, and your brain combines the two to provide depth and produce a sense of three-dimensionality.

Machine learning models need this same capability so that they can accurately understand image data. NVIDIA researchers have now made this possible by creating a rendering framework called DIB-R — a differentiable interpolation-based renderer — that produces 3D objects from 2D images.
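To give a feel for what "interpolation-based" can mean in a renderer, here is a minimal sketch of barycentric interpolation, a standard graphics technique in which a pixel inside a triangle gets a weighted blend of the attributes stored at the triangle's three vertices. This is a generic illustration, not DIB-R's code. The function names `barycentric_weights` and `interpolate` are made up for this example. The weights vary smoothly with the vertex positions, which is the kind of property that lets gradients flow back through a renderer.

```python
def barycentric_weights(p, a, b, c):
    """Return the barycentric weights of 2D point p in triangle (a, b, c)."""
    (px, py), (ax, ay), (bx, by), (cx, cy) = p, a, b, c
    det = (by - cy) * (ax - cx) + (cx - bx) * (ay - cy)
    if det == 0:
        raise ValueError("degenerate triangle")
    w_a = ((by - cy) * (px - cx) + (cx - bx) * (py - cy)) / det
    w_b = ((cy - ay) * (px - cx) + (ax - cx) * (py - cy)) / det
    return w_a, w_b, 1.0 - w_a - w_b


def interpolate(p, triangle, attributes):
    """Blend per-vertex attributes (e.g. RGB colors) at pixel position p."""
    weights = barycentric_weights(p, *triangle)
    channels = len(attributes[0])
    return tuple(
        sum(w * attr[k] for w, attr in zip(weights, attributes))
        for k in range(channels)
    )


# Illustrative data: a triangle with a red, a green and a blue vertex.
triangle = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
colors = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]

corner_color = interpolate((0.0, 0.0), triangle, colors)    # pure red vertex
center_color = interpolate((1 / 3, 1 / 3), triangle, colors)  # even blend
```

At a vertex the pixel takes that vertex's color exactly, and at the centroid it gets an equal mix of all three.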

The researchers will present their model this week at the annual Conference on Neural Information Processing Systems (NeurIPS), in Vancouver.

In traditional computer graphics, a pipeline renders a 3D model to a 2D screen. But there’s information to be gained from doing the opposite — a model that could infer a 3D object from a 2D image would be able to perform better object tracking, for example.
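A small sketch shows why the reverse direction is hard. Assuming a simple pinhole camera (an illustrative model, not something specified by the researchers), projecting a 3D point to the screen divides by its depth, so points at different distances along the same line of sight land on the same pixel. The helper name `project` is made up for this example.

```python
def project(point, focal_length=1.0):
    """Pinhole projection of a camera-space 3D point (x, y, z) to 2D."""
    x, y, z = point
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    return (focal_length * x / z, focal_length * y / z)


near = (1.0, 0.5, 2.0)
far = (2.0, 1.0, 4.0)  # same viewing ray, twice as far from the camera

print(project(near))  # (0.5, 0.25)
print(project(far))   # (0.5, 0.25)
```

Both points produce the same 2D coordinates, so depth cannot be read off a single image directly. A model that infers 3D from 2D has to recover that missing information from learned cues.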

NVIDIA researchers wanted to build an architecture that could do this while integrating seamlessly with machine learning techniques. The result, DIB-R, produces high-fidelity rendering by using an encoder-decoder architecture, a type of neural network that transforms input into a feature map or vector that is used to predict specific information such as shape, color, texture and lighting of an image.

DIB-R is especially useful in fields like robotics. For an autonomous robot to interact safely and efficiently with its environment, it must be able to sense and understand its surroundings. DIB-R could potentially improve a robot’s depth perception.

It takes two days to train the model on a single NVIDIA V100 GPU, whereas it would take several weeks to train without NVIDIA GPUs. Once trained, DIB-R can produce a 3D object from a 2D image in less than 100 milliseconds. It does so by deforming a polygon sphere, the traditional template that represents a 3D shape, so that it matches the real object shape portrayed in the 2D image.
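The idea of reshaping a template sphere can be sketched in a few lines. The example below builds vertices on a unit sphere and moves each one by a per-vertex offset. In a learned system those offsets would come from the network. Here a hand-written stretch along the z axis stands in for them, purely as an assumption for illustration. The function names, the vertex layout (poles omitted for brevity) and the offsets are all hypothetical and are not taken from DIB-R.

```python
import math


def sphere_vertices(n_lat=4, n_lon=8, radius=1.0):
    """Vertices on latitude rings of a sphere (poles omitted for brevity)."""
    verts = []
    for i in range(1, n_lat):
        theta = math.pi * i / n_lat
        for j in range(n_lon):
            phi = 2.0 * math.pi * j / n_lon
            verts.append((
                radius * math.sin(theta) * math.cos(phi),
                radius * math.sin(theta) * math.sin(phi),
                radius * math.cos(theta),
            ))
    return verts


def deform(verts, offsets):
    """Move every template vertex by its own (dx, dy, dz) offset."""
    return [
        (x + dx, y + dy, z + dz)
        for (x, y, z), (dx, dy, dz) in zip(verts, offsets)
    ]


template = sphere_vertices()

# Stand-in for predicted offsets: stretch the sphere along z by 50 percent.
offsets = [(0.0, 0.0, 0.5 * z) for (_, _, z) in template]

deformed = deform(template, offsets)
```

Because the mesh connectivity never changes, only the vertex positions need to be predicted, which keeps the output a fixed-size list of numbers.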

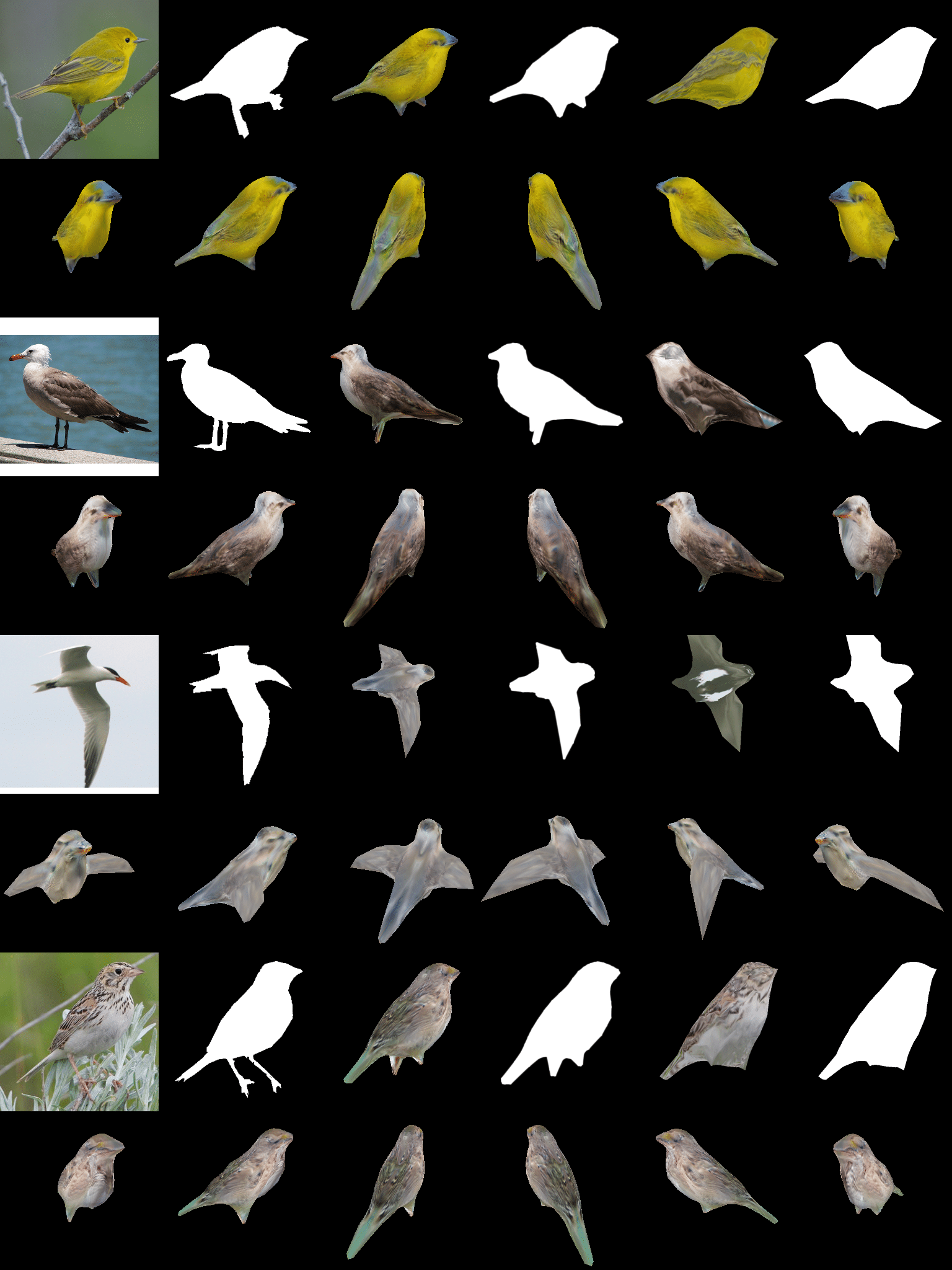

NVIDIA researchers trained their model on several datasets, including a collection of bird images. After training, DIB-R could take an image of a bird and produce a 3D portrayal with the proper shape and texture.

“This is essentially the first time ever that you can take just about any 2D image and predict relevant 3D properties,” says Jun Gao, one of a team of researchers who collaborated on DIB-R.

DIB-R can transform 2D images of long-extinct animals like a Tyrannosaurus rex or a chubby dodo bird into lifelike 3D images in under a second.

Built on PyTorch, a machine learning framework, DIB-R is included in Kaolin, NVIDIA’s newest PyTorch library for accelerating 3D deep learning research.

The entire NVIDIA research paper, “Learning to Predict 3D Objects with an Interpolation-Based Renderer,” can be found here. The NVIDIA Research team consists of more than 200 scientists around the globe, focusing on areas including AI, computer vision, self-driving cars, robotics and graphics.

The post 2D or Not 2D: NVIDIA Researchers Bring Images to Life with AI appeared first on The Official NVIDIA Blog.