What is TF-IDF in Feature Engineering?

Basic concept of TF-IDF in NLP

The concept TF-IDF stands for term frequency-inverse document frequency. This is in the field of numerical statistics. With this concept, we will be able to decide how important a word is to a given document in the present dataset or corpus.

What is TF-IDF?

TF-IDF indicates what the importance of the word is in order to understand the document or dataset. Let us understand with an example. Suppose you have a dataset where students write an essay on the topic, My House. In this dataset, the word a appears many times; it’s a high frequency word compared to other words in the dataset. The dataset contains other words like home, house, rooms and so on that appear less often, so their frequency are lower and they carry more information compared to the word. This is the intuition behind TF-IDF.

Let us dive deep into the mathematical aspect of TF-IDF. It has two parts: Term Frequency(TF) and Inverse Document Frequency(IDF). The term frequency indicates the frequency of each of the words present in the document or dataset.

So, its equation is given as follows:

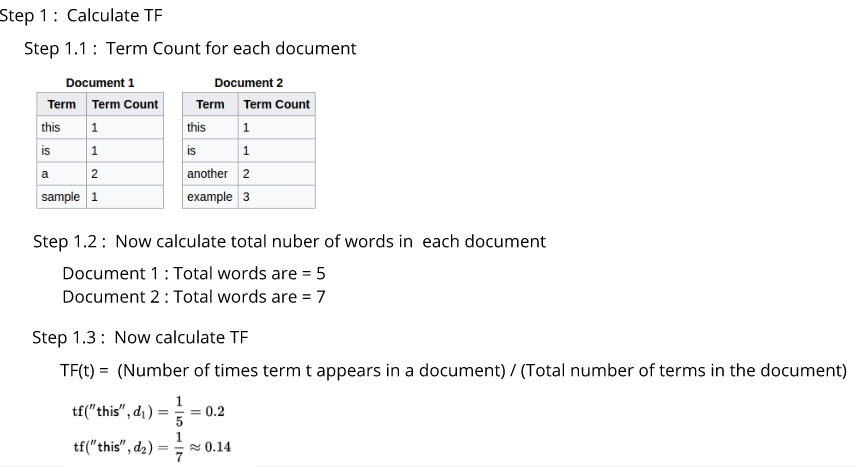

TF(t) = (Number of times term t appears in a document) / (Total number of terms in the document)

The second part is — inverse document frequency. IDF actually tells us how important the word is to the document. This is because when we calculate TF, we give equal importance to every single word. If the word appears in the dataset more frequently, then its term frequency (TF) value is high while not being that important to the document.

So, if the word the appears in the document 100 times, then it’s not carrying that much information compared to words that are less frequent in the dataset. Thus, we need to define some weighing down of the frequent terms while scaling up the rare ones, which decides the importance of each word. We will achieve this with the following equation:

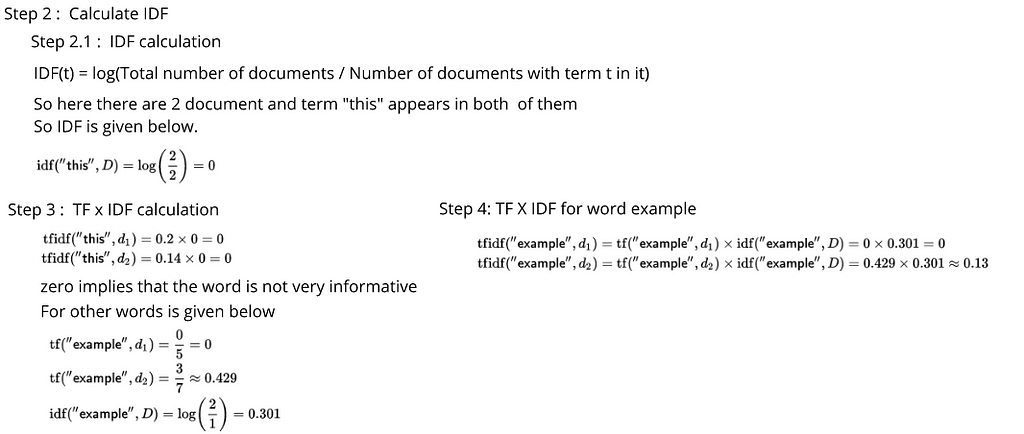

IDF(t) = log10(Total number of documents / Number of documents with term t in it).

Hence, equation is calculate TF-IDF is as follows.

TF * IDF = [ (Number of times term t appears in a document) / (Total number of terms in the document) ] * log10(Total number of documents / Number of documents with term t in it).

In reality, TF-IDF is the multiplication of TF and IDF, such as TF * IDF.

Now, let’s take an example where you have two sentences and are considering those sentences as different documents in order to understand the concept of TF-IDF:

Document 1: This is a sample.

Document 2: This is another example.

In summary, to calculate TF-IDF, we will follow these steps:

1. We first calculate the frequency of each word for each document.

2. We calculate IDF.

3. We multiply TF and IDF.

Subscribe to our Acing AI newsletter, I promise not to spam and its FREE!

Thanks for reading! 😊 If you enjoyed it, test how many times can you hit 👏 in 5 seconds. It’s great cardio for your fingers AND will help other people see the story.

Reference: Python NLP

What is TF-IDF in Feature Engineering? was originally published in Acing AI on Medium, where people are continuing the conversation by highlighting and responding to this story.