ACR AI-LAB and NVIDIA Make AI in Hospitals Easy on IT, Accessible to Every Radiologist

For radiology to benefit from AI, hospital IT departments need easy, consistent and scalable ways to implement the technology. That means a return to a service-oriented architecture, where logical components are separated and can each scale individually, and an efficient use of the additional compute power these tools require.

AI is coming from dozens of vendors as well as internal innovation groups, and needs a place within the hospital network to thrive. That’s why NVIDIA and the American College of Radiology (ACR) have published a Hospital AI Reference Architecture Framework. It helps hospitals easily get started with AI initiatives.

A Cookbook to Make AI Easy

The Hospital AI Reference Architecture Framework was published at yesterday’s annual ACR meeting for public comment. This follows the recent launch of the ACR AI-LAB, which aims to standardize and democratize AI in radiology. The ACR AI-LAB uses infrastructure such as NVIDIA GPUs and the NVIDIA Clara AI toolkit, as well as GE Healthcare’s Edison platform, which helps bring AI from research into FDA-cleared smart devices.

The Hospital AI Reference Architecture Framework outlines how hospitals and researchers can easily get started with AI initiatives. It includes descriptions of the steps required to build and deploy AI systems, and provides guidance on the infrastructure needed for each step.

To drive an effective AI program within a healthcare institution, there must first be an understanding of the workflows involved, the compute needs and the data required. The foundation is enabling better insights from patient data with easy-to-deploy compute at the edge.

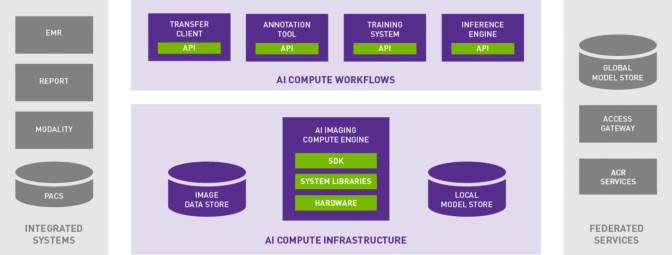

Using a transfer client, seed models can be downloaded from a centralized model store. A clinical champion uses an annotation tool to locally create data that can be used for fine-tuning the seed model or training a new model. Then, using the training system with the annotated data, a localized model is instantiated. Finally, an inference engine is used to conduct validation and ultimately inference on data within the institution.

These four workflows sit atop AI compute infrastructure, which can be accelerated with NVIDIA GPU technology for best performance, alongside storage for models and annotated studies. These workflows tie back into other hospital systems such as PACS, where medical images are archived.
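To make the four workflows concrete, here is a minimal Python sketch of how they might chain together. It is an illustration only, not code from the framework, NVIDIA Clara or the ACR AI-LAB: every name in it (`ModelStore`, `annotate`, `fine_tune`, `infer`, `validate`) is hypothetical, the "model" is a single decision threshold standing in for real network weights, and each study is reduced to one number standing in for image data.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

# All names below are hypothetical stand-ins, not a real vendor API.


@dataclass
class Model:
    name: str
    threshold: float          # toy stand-in for real model weights
    localized: bool = False


@dataclass
class AnnotatedStudy:
    study_id: str
    feature: float            # toy stand-in for image data
    label: int                # 0 or 1, assigned by the clinical champion


class ModelStore:
    """Hypothetical centralized model store holding seed models."""

    def __init__(self) -> None:
        self._models: Dict[str, Model] = {}

    def publish(self, model: Model) -> None:
        self._models[model.name] = model

    def download(self, name: str) -> Model:
        # Workflow 1: the transfer client fetches a copy of a seed model.
        seed = self._models[name]
        return Model(seed.name, seed.threshold, seed.localized)


def annotate(studies: List[Tuple[str, float]],
             labeler: Callable[[str], int]) -> List[AnnotatedStudy]:
    # Workflow 2: a clinician labels local studies; the data stays on site.
    return [AnnotatedStudy(sid, feat, labeler(sid)) for sid, feat in studies]


def fine_tune(seed: Model, data: List[AnnotatedStudy]) -> Model:
    # Workflow 3: derive a localized model from the seed and local data.
    # Toy rule: put the threshold midway between the two class means.
    neg = [s.feature for s in data if s.label == 0]
    pos = [s.feature for s in data if s.label == 1]
    if not neg or not pos:
        raise ValueError("need at least one annotated study of each class")
    threshold = (sum(neg) / len(neg) + sum(pos) / len(pos)) / 2
    return Model(seed.name + "-local", threshold, localized=True)


def infer(model: Model, feature: float) -> int:
    # Workflow 4: run the model on data inside the institution.
    return int(feature >= model.threshold)


def validate(model: Model, data: List[AnnotatedStudy]) -> float:
    # Fraction of studies predicted correctly. A real validation step
    # would use held-out studies rather than the training data.
    correct = sum(infer(model, s.feature) == s.label for s in data)
    return correct / len(data)


def run_demo() -> Tuple[float, float, float]:
    store = ModelStore()
    store.publish(Model("seed", threshold=0.5))
    seed = store.download("seed")

    studies = [("s1", 0.55), ("s2", 0.60), ("s3", 0.90), ("s4", 0.95)]
    labels = {"s1": 0, "s2": 0, "s3": 1, "s4": 1}
    annotated = annotate(studies, lambda sid: labels[sid])

    local = fine_tune(seed, annotated)
    return validate(seed, annotated), validate(local, annotated), local.threshold


if __name__ == "__main__":
    print(run_demo())
```

In this toy setup the seed model gets only half of the local studies right, while the localized model fits them all, which is the point of fine-tuning on an institution's own annotated data. In a real deployment each function would be a separate service that scales on its own, in line with the service-oriented approach described earlier.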

Three Magic Ingredients: Hospital Data, Clinical AI Workflows, AI Computing

Healthcare institutions don’t have to build the systems to deploy AI tools themselves.

This scalable architecture is designed to support and provide computing power to solutions from different sources. GE Healthcare’s Edison platform now uses the inference capabilities of NVIDIA’s TensorRT Inference Server (TRT-IS) to help AI run in an optimized way within GPU-powered software and medical devices. This integration makes it easier to deliver AI from multiple vendors into clinical workflows, and it is the first example of the AI-LAB’s efforts to help hospitals adopt solutions from different vendors.

Together, Edison with TRT-IS offers a ready-made device inferencing platform that is optimized for GPU-compliant AI, so models built anywhere can be deployed in an existing healthcare workflow.

Hospitals and researchers are empowered to embrace AI technologies without building their own standalone technology or yielding their data to the cloud, which has privacy implications.

The post ACR AI-LAB and NVIDIA Make AI in Hospitals Easy on IT, Accessible to Every Radiologist appeared first on The Official NVIDIA Blog.