Capturing Special Video Moments with Google Photos

Recording video of memorable moments to share with friends and loved ones has become commonplace. But as anyone with a sizable video library can tell you, going through all that raw footage in search of the perfect clips to relive or share is a time-consuming task. Google Photos makes this easier by automatically finding magical moments in your videos, like when your child blows out the candle or when your friend jumps into a pool, and creating animations from them that you can easily share with friends and family.

In “Rethinking the Faster R-CNN Architecture for Temporal Action Localization”, we address some of the challenges behind automating this task, which stem from the complexity of identifying and categorizing actions in highly variable input data. We do so by introducing an improved method for identifying the exact location within a video where a given action occurs. Our temporal action localization network (TALNet) draws inspiration from advances in region-based object detection methods such as the Faster R-CNN network. TALNet can identify moments that vary widely in duration and achieves state-of-the-art performance compared to other methods, allowing Google Photos to recommend the best part of a video for you to share with friends and family.

| An example of the detected action “blowing out candles” |

Identifying Actions for Model Training

The first step in identifying magic moments in videos is to assemble a list of actions that people might wish to highlight. Some examples of actions include “blow out birthday candles”, “strike (bowling)”, “cat wags tail”, etc. We then crowdsourced the annotation of segments within a collection of public videos where these specific actions occurred, in order to create a large training dataset. We asked the raters to find and label all moments, accommodating videos that might have several moments. This final annotated dataset was then used to train our model so that it could identify the desired actions in new, unknown videos.

Comparison to Object Detection

The challenge of recognizing these actions belongs to the field of computer vision known as temporal action localization, which, like the more familiar object detection, falls under the umbrella of visual detection problems. Given a long, untrimmed video as input, temporal action localization aims to identify the start and end times, as well as the action label (like “blowing out candles”), for each action instance in the full video. While object detection aims to produce spatial bounding boxes around an object in a 2D image, temporal action localization aims to produce temporal segments containing an action in a 1D sequence of video frames.
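To make the analogy concrete, here is a minimal Python sketch of the output described above: each detected instance is a labeled segment with a start and an end time. The `ActionSegment` type and the `temporal_iou` helper are illustrative assumptions, not part of TALNet. Temporal intersection-over-union is simply the 1D analogue of the box-overlap measure commonly used in object detection.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionSegment:
    """One action instance: a labeled 1D interval in seconds (hypothetical type)."""
    start: float
    end: float
    label: str


def temporal_iou(a: ActionSegment, b: ActionSegment) -> float:
    """Intersection-over-union of two time intervals."""
    intersection = max(0.0, min(a.end, b.end) - max(a.start, b.start))
    union = (a.end - a.start) + (b.end - b.start) - intersection
    return intersection / union if union > 0 else 0.0


# Made-up timestamps, for illustration only.
predicted = ActionSegment(start=12.0, end=16.0, label="blowing out candles")
annotated = ActionSegment(start=13.0, end=17.0, label="blowing out candles")
overlap = temporal_iou(predicted, annotated)  # 3 s shared out of 5 s covered
```

Where an image detector compares areas of overlapping boxes, a temporal detector only has to compare lengths of overlapping intervals.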

TALNet is inspired by the Faster R-CNN object detection framework for 2D images. So, to understand TALNet, it is useful to first understand Faster R-CNN. The figure below demonstrates how the Faster R-CNN architecture is used for object detection. The first step is to generate a set of object proposals, regions of the image that can be used for classification. To do this, an input image is first converted into a 2D feature map by a convolutional neural network (CNN). The region proposal network then generates bounding boxes around candidate objects. These boxes are generated at multiple scales in order to capture the large variability in objects’ sizes in natural images. With the object proposals now defined, the subjects in the bounding boxes are then classified by a deep neural network (DNN) into specific objects, such as “person”, “bike”, etc.

| Faster R-CNN architecture for object detection |

Temporal Action Localization

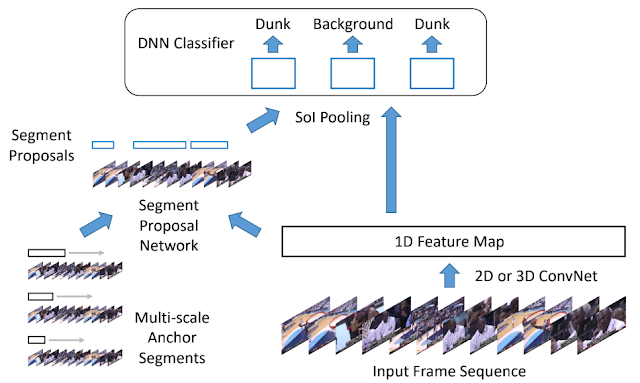

Temporal action localization is accomplished in a fashion similar to that used by Faster R-CNN. A sequence of input frames from a video is first converted into a 1D feature map that encodes scene context. This map is passed to a segment proposal network that generates candidate segments, each defined by start and end times. A DNN then applies the representations learned from the training dataset to classify the actions in the proposed video segments (e.g., “slam dunk”, “pass”, etc.). The actions identified in each segment are scored according to their learned representations, and the top-scoring moment is selected to share with the user.
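The propose-then-score flow can be sketched in a few lines of Python. This is a toy under stated assumptions, not the TALNet implementation: plain sliding windows stand in for the learned segment proposal network, a hand-written `toy_score` function stands in for the classification DNN, and the per-frame values in `frame_features` are made up. All names here are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Segment = Tuple[int, int]  # (start_frame, end_frame), end exclusive


def candidate_segments(num_frames: int, lengths: List[int], stride: int) -> List[Segment]:
    """Enumerate candidate segments of several lengths along the 1D frame axis."""
    segments = []
    for length in lengths:
        for start in range(0, num_frames - length + 1, stride):
            segments.append((start, start + length))
    return segments


def best_segment(
    features: Sequence[float],
    segments: List[Segment],
    score_fn: Callable[[Sequence[float]], float],
) -> Segment:
    """Return the candidate whose features receive the highest score."""
    return max(segments, key=lambda seg: score_fn(features[seg[0]:seg[1]]))


def toy_score(window: Sequence[float]) -> float:
    """Stand-in scorer: +1 for each action frame, -1 for each background frame."""
    return sum(2.0 * x - 1.0 for x in window)


# One made-up scalar per frame: 1.0 where the action is visible, 0.0 elsewhere.
frame_features = [0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0, 0.0, 0.0, 0.0]
segments = candidate_segments(len(frame_features), lengths=[2, 4, 8], stride=1)
best = best_segment(frame_features, segments, toy_score)
```

Generating candidates at several lengths mirrors the multi-scale boxes of the region proposal network: a short window cannot cover a long action, and a long window dilutes a short one with background frames.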

| Architecture for temporal action localization |

Special Considerations for Temporal Action Localization

While temporal action localization can be viewed as the 1D counterpart of the object detection problem, care must be taken to address a number of issues unique to action localization. In particular, we identify three issues that arise when applying the Faster R-CNN approach to the action localization domain, and redesign the architecture to address them.

- Actions have much larger variations in duration

The temporal extent of actions varies dramatically, from a fraction of a second to minutes. For long actions, it is not important to understand each and every frame of the action. Instead, we can get a better handle on the action by skimming quickly through the video, using dilated temporal convolutions. This approach allows TALNet to search the video for temporal patterns while skipping over frames according to a given dilation rate. Analyzing the video with several different rates, selected automatically according to the anchor segment’s length, enables efficient identification of actions as long as the entire video or as short as a second.

- The context before and after an action is important

The moments preceding and following an action instance contain critical information for localization and classification, arguably more so than the spatial context of an object. Therefore, we explicitly encode the temporal context by extending proposal segments on both the left and right by a fixed percentage of the segment’s length, in both the proposal generation stage and the classification stage.

- Actions require multi-modal input

Actions are defined by appearance, motion and sometimes even audio information. Therefore, it is important to consider multiple modalities of features for the best results. We use a late fusion scheme for both the proposal generation network and the classification network: each modality has a separate proposal generation network, and their outputs are combined to obtain the final set of proposals. These proposals are then classified by a separate classification network for each modality, and the resulting scores are averaged to obtain the final predictions.
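The three adjustments above can each be illustrated with a few lines of Python. This is a rough sketch under stated assumptions rather than the TALNet code: the function names, the all-ones kernel, the 50% context ratio, and the modality names are hypothetical choices made for illustration, and only the averaging of classification scores is shown for late fusion.

```python
from typing import Dict, List, Tuple


def dilated_conv1d(signal: List[float], kernel: List[float], dilation: int) -> List[float]:
    """1D convolution (cross-correlation form) whose taps sit `dilation` frames apart."""
    span = (len(kernel) - 1) * dilation  # frames between the first and last tap
    return [
        sum(w * signal[t + k * dilation] for k, w in enumerate(kernel))
        for t in range(len(signal) - span)
    ]


def extend_with_context(
    start: float, end: float, ratio: float, video_length: float
) -> Tuple[float, float]:
    """Grow a segment on both sides by `ratio` of its length, clipped to the video."""
    margin = ratio * (end - start)
    return max(0.0, start - margin), min(video_length, end + margin)


def late_fusion(scores_by_modality: Dict[str, List[float]]) -> List[float]:
    """Average per-class scores across modalities."""
    per_class = zip(*scores_by_modality.values())
    return [sum(values) / len(scores_by_modality) for values in per_class]


# 1. Dilation: each output sums frames t, t+2 and t+4, so three taps span five frames.
frames = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
skimmed = dilated_conv1d(frames, kernel=[1.0, 1.0, 1.0], dilation=2)

# 2. Context: 2 s is added on the left; the right side is clipped at the video's end.
context_window = extend_with_context(start=10.0, end=14.0, ratio=0.5, video_length=15.0)

# 3. Late fusion: made-up scores for two classes from two modality-specific classifiers.
fused = late_fusion({"appearance": [0.8, 0.2], "motion": [0.6, 0.4]})
```

A larger dilation widens the span of frames each output sees without adding kernel weights, which is what makes it practical to match the receptive field to long and short anchor segments alike.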

TALNet in Action

As a consequence of these improvements, TALNet achieves state-of-the-art performance for both action proposal and action localization tasks on the THUMOS’14 detection benchmark and competitive performance on the ActivityNet challenge. Now, whenever people save videos to Google Photos, our model identifies these moments and creates animations to share. Here are a few examples shared by our initial testers.

| An example of the detected action “sliding down a slide” |

| An example of the detected actions “jump into the pool” (left), “twirl in a dress” (center) and “feed baby a spoonful” (right). |

Next Steps

We are continuing work to improve the precision and recall of action localization using more data, features and models. Improvements in temporal action localization can drive progress on a large number of important topics, ranging from video highlights and video summarization to search and more. We hope to continue improving the state of the art in this domain and at the same time provide more ways for people to reminisce about their memories, big and small.

Acknowledgements

Special thanks to Tim Novikoff and Yu-Wei Chao, as well as Bryan Seybold, Lily Kharevych, Siyu Gu, Tracy Gu, Tracy Utley, Yael Marzan, Jingyu Cui, Balakrishnan Varadarajan and Paul Natsev for their critical contributions to this project.